REINFORCE and Dreamer (2021)

Content

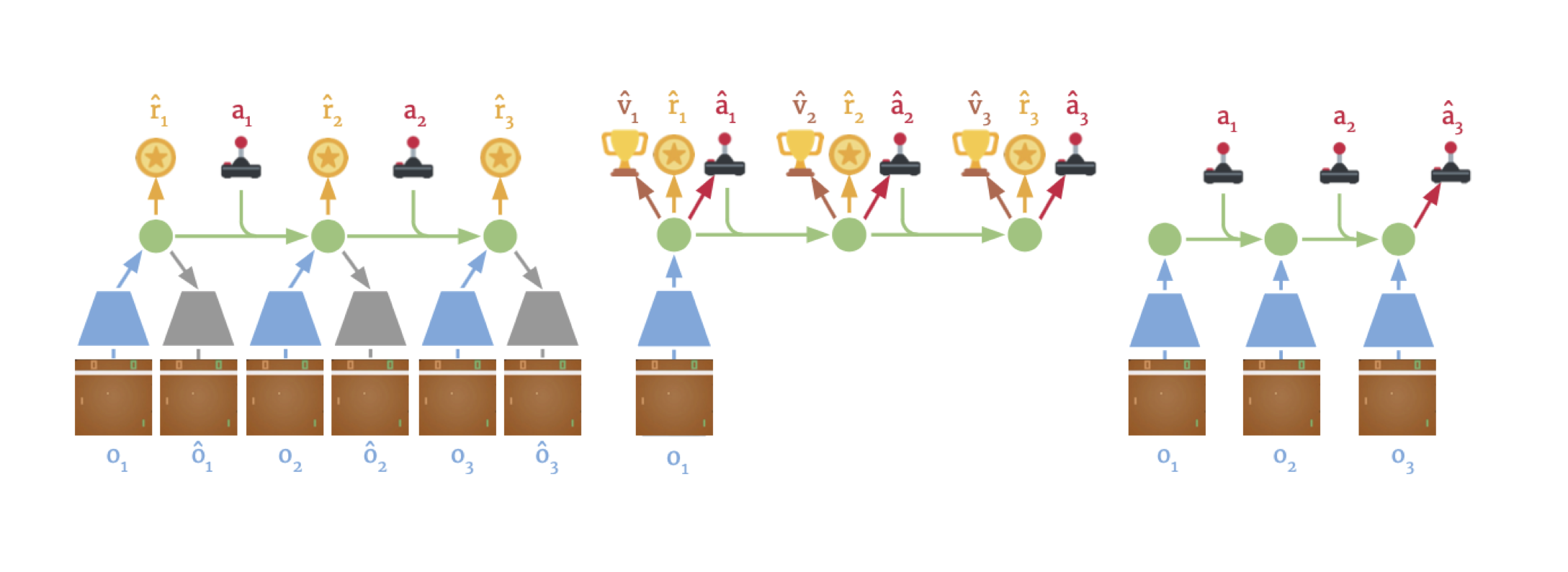

In this work, we compare the world-model-based learning agent DreamerV2 with both evolution strategies and the Monte Carlo policy gradient method (REINFORCE). Dreamer is a model-based reinforcement learning agent that learns a so-called 'world model': a compact latent-space representation of complex environment dynamics. This world model allows the agent to learn its policy inside the latent space before applying it in the actual environment. The learning process can thus be separated into three distinct phases: learning the world model, learning the actor and critic within the latent space, and applying the learned policy in the actual environment, which also collects new experience for the world model. The model is visualized in the image below:
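To make the two non-model-based methods concrete, here is a minimal, stdlib-only Python sketch of a REINFORCE update and an evolution-strategies (ES) update. It is not this project's implementation. The tabular softmax policy, the one-state bandit and the quadratic objective are hypothetical stand-ins for the Atari setups, the function names are made up for illustration, and all hyperparameters (`gamma`, `lr`, `n`, `sigma`) are illustrative assumptions. Like many implementations, the REINFORCE update drops the γ^t factor from the textbook derivation and uses no baseline.

```python
import math
import random


def discounted_returns(rewards, gamma=0.99):
    """Monte Carlo returns G_t = r_t + gamma * G_{t+1}, computed backwards."""
    returns, running = [0.0] * len(rewards), 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_update(theta, episode, gamma=0.99, lr=0.1):
    """One REINFORCE step for a tabular softmax policy (no baseline, no gamma^t).

    theta:   dict state -> list of action logits (hypothetical parameterisation)
    episode: list of (state, action, reward) tuples from one complete rollout
    """
    returns = discounted_returns([r for _, _, r in episode], gamma)
    # Accumulate over the whole episode so every term uses the pre-update policy.
    grads = {s: [0.0] * len(v) for s, v in theta.items()}
    for (state, action, _), g in zip(episode, returns):
        probs = softmax(theta[state])
        for a, p in enumerate(probs):
            # d log pi(action|state) / d logit_a = 1[a == action] - pi(a|state)
            grads[state][a] += g * ((1.0 if a == action else 0.0) - p)
    for state, grad in grads.items():
        for a, g_a in enumerate(grad):
            theta[state][a] += lr * g_a  # gradient ascent on expected return
    return theta


def es_step(theta, fitness, rng, n=50, sigma=0.1, lr=0.05):
    """One ES step: estimate the gradient of `fitness` at `theta` from
    n Gaussian perturbations, using only fitness evaluations."""
    eps = [[rng.gauss(0.0, 1.0) for _ in theta] for _ in range(n)]
    scores = [fitness([t + sigma * e for t, e in zip(theta, ep)]) for ep in eps]
    mean = sum(scores) / n  # subtracting the mean score reduces variance
    grad = [sum((s - mean) * ep[i] for s, ep in zip(scores, eps)) / (n * sigma)
            for i in range(len(theta))]
    return [t + lr * g for t, g in zip(theta, grad)]


def train_bandit_reinforce(steps=500, seed=0):
    """Toy one-state, two-action bandit in which only action 1 is rewarded."""
    rng = random.Random(seed)
    theta = {0: [0.0, 0.0]}
    for _ in range(steps):
        probs = softmax(theta[0])
        action = 0 if rng.random() < probs[0] else 1
        reward = 1.0 if action == 1 else 0.0
        theta = reinforce_update(theta, [(0, action, reward)])
    return softmax(theta[0])


def train_quadratic_es(steps=300, seed=0):
    """Toy objective F(x) = -(x - 3)^2, maximised at x = 3."""
    rng = random.Random(seed)
    theta = [0.0]
    for _ in range(steps):
        theta = es_step(theta, lambda x: -(x[0] - 3.0) ** 2, rng)
    return theta


if __name__ == "__main__":
    print("REINFORCE action probabilities:", train_bandit_reinforce())
    print("ES solution:", train_quadratic_es())
```

The contrast with Dreamer is visible even at this scale. REINFORCE needs the gradient of the policy's log-probabilities along real rollouts. ES only evaluates the fitness of perturbed parameters, so it treats the policy as a black box. Neither method learns a model of the environment dynamics.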

All models are trained on either the Pong-v0 or the Pinball Atari environment. As a baseline, we also report the results of a random agent. An example frame of the Pong-v0 game can be seen below.

We compare the different implementations in terms of model complexity, training time and final performance. We further investigate Dreamer's sensitivity to the size of its categorical latent space.
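DreamerV2 represents each stochastic latent state as a vector of categorical variables (32 variables with 32 classes each in the main reference), so the size of the categorical latent space can be varied along two axes. The stdlib-only Python sketch below shows how a flat one-hot latent is sampled from per-variable logits, and how the flat dimension and the number of distinct latent states scale with those two axes. The helper names `sample_categorical_latent` and `latent_size` are hypothetical. The sketch omits the straight-through gradient estimator DreamerV2 uses to backpropagate through the samples, because that requires an autodiff framework. The non-default sizes in the usage loop are illustrative only and are not the configurations tested in this work.

```python
import math
import random


def sample_categorical_latent(logits, rng):
    """Sample one one-hot vector per categorical variable and flatten them.

    logits: list of num_vars lists, each holding num_classes logits.
    Returns a flat list of length num_vars * num_classes that contains
    exactly one 1.0 per variable.
    """
    flat = []
    for var_logits in logits:
        m = max(var_logits)
        weights = [math.exp(x - m) for x in var_logits]  # unnormalised softmax
        k = rng.choices(range(len(weights)), weights=weights)[0]
        one_hot = [0.0] * len(weights)
        one_hot[k] = 1.0
        flat.extend(one_hot)
    return flat


def latent_size(num_vars, num_classes):
    """Flat input dimension and number of distinct one-hot latent states."""
    return num_vars * num_classes, num_classes ** num_vars


if __name__ == "__main__":
    rng = random.Random(0)
    # 32 x 32 is the DreamerV2 default; the other sizes are illustrative only.
    for num_vars, num_classes in [(32, 32), (16, 16), (32, 64)]:
        logits = [[rng.gauss(0.0, 1.0) for _ in range(num_classes)]
                  for _ in range(num_vars)]
        z = sample_categorical_latent(logits, rng)
        dim, states = latent_size(num_vars, num_classes)
        print(f"{num_vars}x{num_classes}: dim={dim}, ones={int(sum(z))}, "
              f"log2(states)={math.log2(states):.0f}")
```

The flat dimension grows only linearly in either axis, while the number of representable latent states grows exponentially in the number of variables. This is one reason why shrinking or enlarging the latent space can change Dreamer's behavior in a way that is not proportional to the parameter count.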

Main reference: Mastering Atari with Discrete World Models (Hafner et al., ICLR 2021)